Noah Lee

I am an LLM researcher on the Kanana Team at Kakao, where I work on post-training LLMs. Previously, I completed my Master’s degree at the Kim Jaechul Graduate School of AI at KAIST, jointly advised by James Thorne and Jinwoo Shin.

My current research interests include, but are not limited to:

- Bettering human representation of LLMs

- Inducing test-time alignment and/or controllability of LLMs

- Personalization & customization of LLMs

Feel free to get in touch!

News

| Sep 15, 2025 | I joined the Kanana Team of Kakao Corp. to work on LLM post-training. |

|---|---|

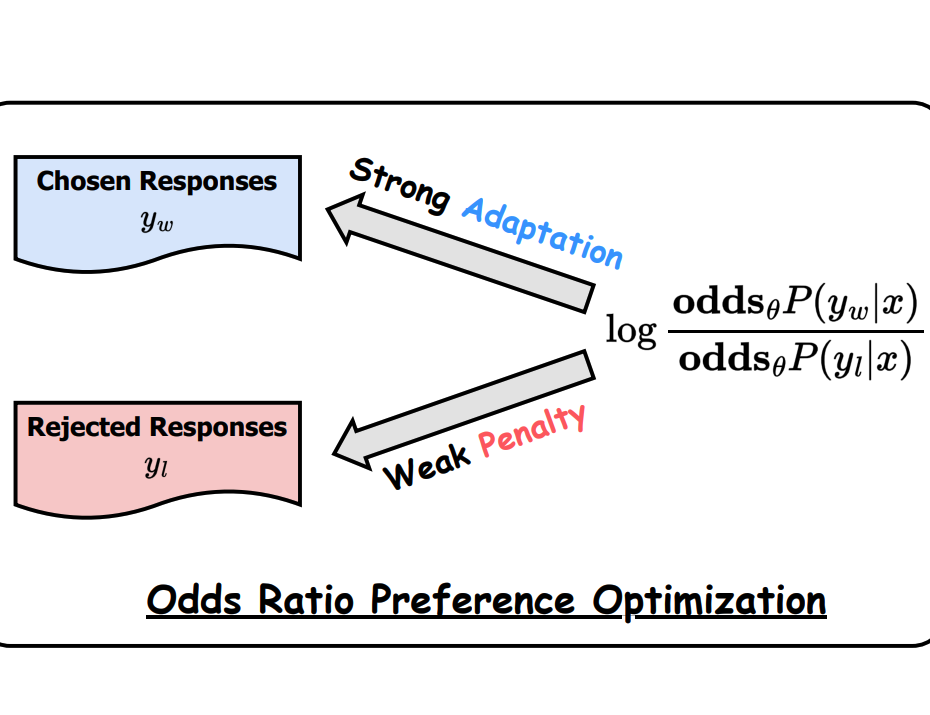

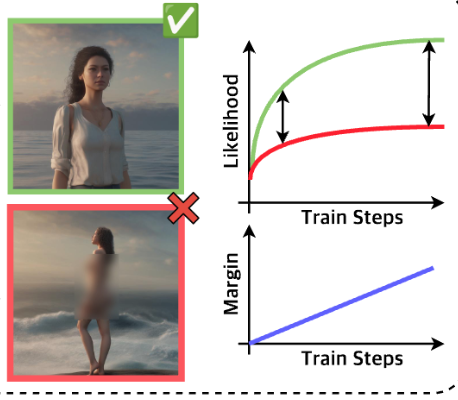

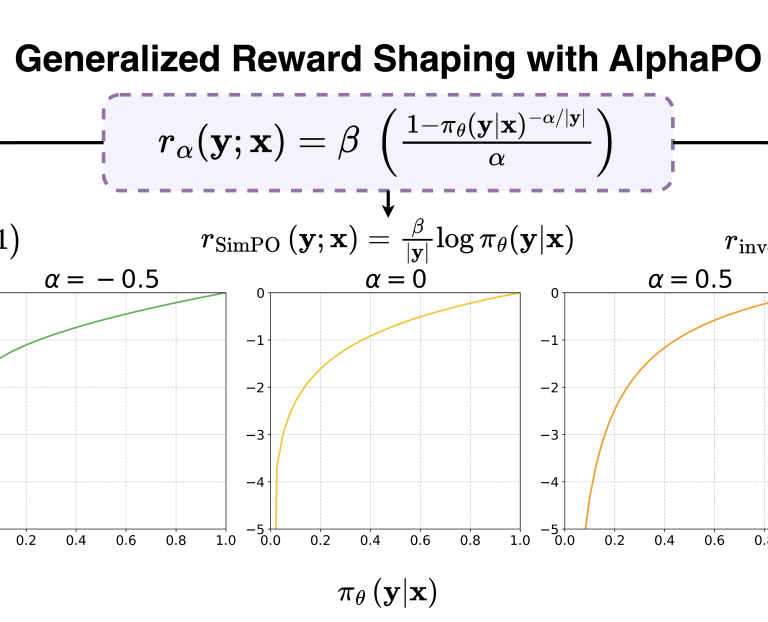

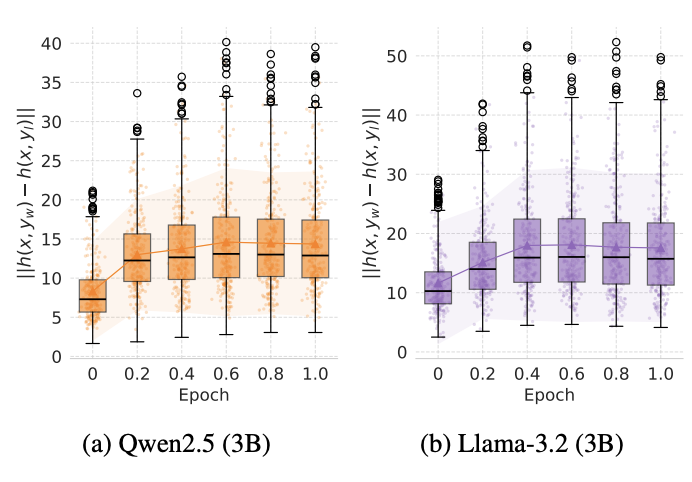

| Jul 1, 2025 | Two papers (Robust RM & AlphaPO) have been accepted to ICML 2025! |

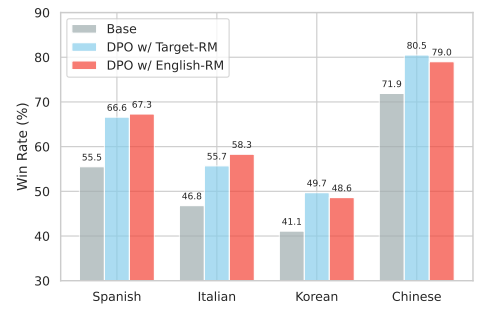

| Jan 24, 2025 | Our RM cross-lingual paper has been accepted to NAACL 2025! |

| Dec 2, 2024 | A paper has been accepted to COLING 2025 |

| Oct 30, 2024 | Check out our new preprint on cross-lingual transfer of reward models! |

Publications

-

-

-

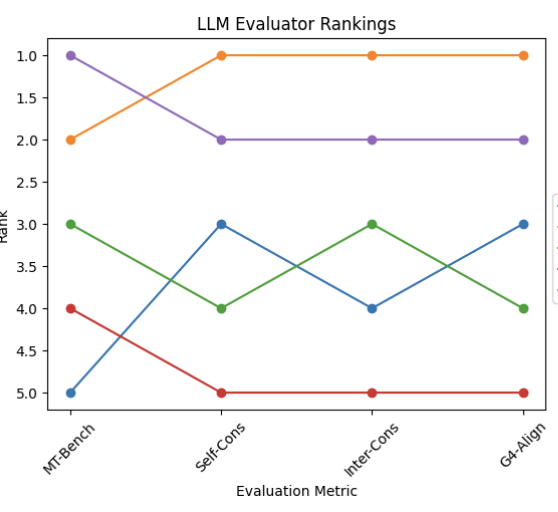

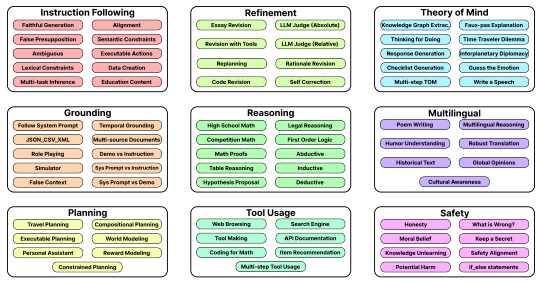

- The BiGGen Bench: A Principled Benchmark for Fine-grained Evaluation of Language Models with Language Models. NAACL (Best Paper), 2025
